What is a Petabyte? Definition, Uses, and Its Role in Data Storage


Petabyte Definition: What is a petabyte?

1. Understanding the size of a petabyte

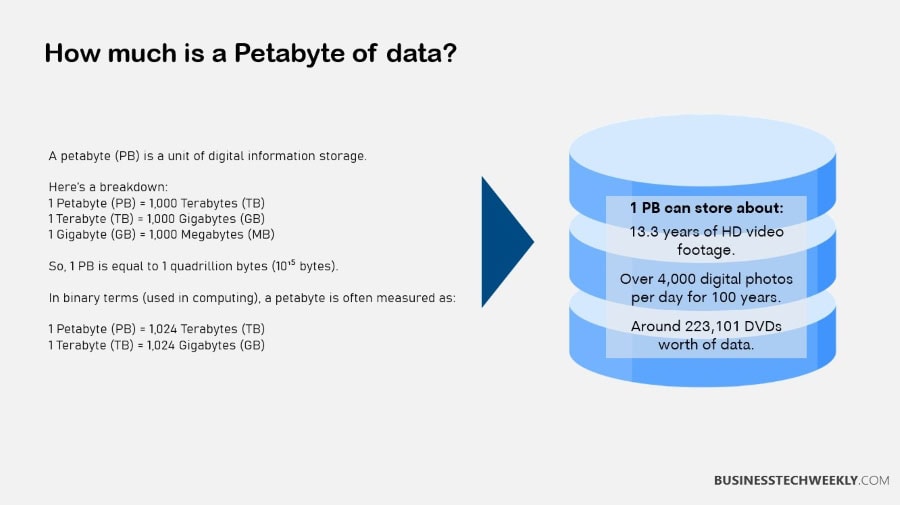

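A petabyte is a gigantic measure of digital storage: exactly 1,000 terabytes, or 1,000,000 gigabytes, in the decimal (SI) units that storage manufacturers use. In simple terms, a petabyte equals 1 quadrillion (10^15) bytes. Under the older binary convention, each step is a factor of 1,024 instead; that binary unit, now formally called the pebibyte (PiB), is 1,024 tebibytes, 1,048,576 gibibytes, or specifically 1,125,899,906,842,624 (2^50) bytes.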
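To see how far apart the two conventions drift, a few lines of Python are enough. This is a minimal sketch using plain integer arithmetic; the constant names are our own, chosen for illustration.

```python
# Decimal (SI) units: each step up is a factor of 1,000.
GIGABYTE = 10**9
TERABYTE = 10**12
PETABYTE = 10**15   # 1 PB

# Binary (IEC) units: each step up is a factor of 1,024.
GIBIBYTE = 2**30
TEBIBYTE = 2**40
PEBIBYTE = 2**50    # 1 PiB

print(f"1 PB  = {PETABYTE:,} bytes = {PETABYTE // TERABYTE:,} TB = {PETABYTE // GIGABYTE:,} GB")
print(f"1 PiB = {PEBIBYTE:,} bytes = {PEBIBYTE // TEBIBYTE:,} TiB = {PEBIBYTE // GIBIBYTE:,} GiB")

# Relative size difference between the binary and decimal units, in percent.
gap = (PEBIBYTE - PETABYTE) / PETABYTE * 100
print(f"1 PiB is about {gap:.1f}% larger than 1 PB")
```

Running it prints 1,000 TB and 1,000,000 GB for the petabyte, 1,024 TiB and 1,048,576 GiB for the pebibyte, and a gap of about 12.6%. That gap is why storage sold in decimal units can look smaller when an operating system reports it in binary units.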

That’s an incredible amount of data, so let’s try to understand it with relatable examples.

In other terms, a petabyte could store 200 million five-megabyte images.

If we picture that in terms of movies, one petabyte stores about 20,000 HD movies at roughly 50 GB each. At around two hours per film, that is some 40,000 hours, or more than four and a half years of continuous viewing, playing every film just once.

To give an idea for bibliophiles, a petabyte would be equivalent to 500 billion pages of double-spaced text in 12-point font. That’s enough to fill 20 million four-drawer filing cabinets!

Here’s what else a petabyte can store:

- The contents of 1,000 one-terabyte hard drives.

- The digitized text of the Library of Congress’s print collection, over 50 times.

- Enough MP3 music to play continuously for about 1,900 years.
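The figures above are simple division under assumed per-item sizes. The sketch below makes those assumptions explicit; the sizes are illustrative averages (2 KB per page is what the 500-billion-page figure implies), not measurements.

```python
PETABYTE = 10**15  # decimal petabyte, in bytes

# Assumed average sizes, in bytes (illustrative, not measured).
PHOTO_BYTES = 5 * 10**6          # a five-megabyte image
PAGE_BYTES = 2 * 10**3           # about 2 KB of text per double-spaced page
DRIVE_BYTES = 10**12             # a 1 TB hard drive
MP3_BYTES_PER_MINUTE = 10**6     # about 1 MB per minute of MP3 audio

MINUTES_PER_YEAR = 60 * 24 * 365.25

photos = PETABYTE // PHOTO_BYTES
pages = PETABYTE // PAGE_BYTES
drives = PETABYTE // DRIVE_BYTES
music_years = PETABYTE / MP3_BYTES_PER_MINUTE / MINUTES_PER_YEAR

print(f"{photos:,} five-megabyte photos")      # 200,000,000 five-megabyte photos
print(f"{pages:,} pages of text")              # 500,000,000,000 pages of text
print(f"{drives:,} one-terabyte drives")       # 1,000 one-terabyte drives
print(f"about {music_years:,.0f} years of continuous MP3 audio")  # about 1,901 years
```

Changing any assumed size changes the answer proportionally, which is why published "a petabyte holds N movies" figures vary so widely.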

2. Comparing a petabyte to smaller units

When we compare a petabyte to smaller units, the scale is even more apparent. A gigabyte (GB) would be more typical for single files or smaller forms of data. A petabyte is much larger and beyond general storage requirements.

In data terms:

- 1 petabyte = 1,000 terabytes

- 1 petabyte = 1,000,000 gigabytes (in binary units, 1 pebibyte = 1,048,576 gibibytes)

Here’s a table of how much more data a petabyte can accommodate compared with gigabytes and terabytes (examples assume roughly 4 MB per photo and 1 GB per compressed HD movie):

| Unit | Equivalent in bytes | Examples |
|---|---|---|
| 1 Gigabyte (GB) | 1 billion bytes | A typical compressed HD movie or 250 photos |
| 1 Terabyte (TB) | 1 trillion bytes | Around 250,000 photos or 1,000 HD movies |
| 1 Petabyte (PB) | 1 quadrillion bytes | Around 250 million photos or 1 million HD movies |

3. How a petabyte is used

Petabytes are generally only relevant in large-scale systems where the ability to handle massive data sets is key.

Typical environments include data centers, server farms, and cloud storage facilities. Companies such as Google and Facebook, for example, process data measured in petabytes every day.

Facebook’s Hadoop cluster famously hit 21 petabytes back in 2010, and that figure has only increased since then.

In practical terms, petabytes quantify the cumulative data held across large, complex networks.

For instance, the user-engagement records, analytics datasets, and backups of billion-user platforms are all measured in petabytes. Streaming services like Netflix and cloud providers like AWS rely on petabyte-scale storage to deliver rich, dynamic content and services to their users reliably.

Data measurement hierarchy

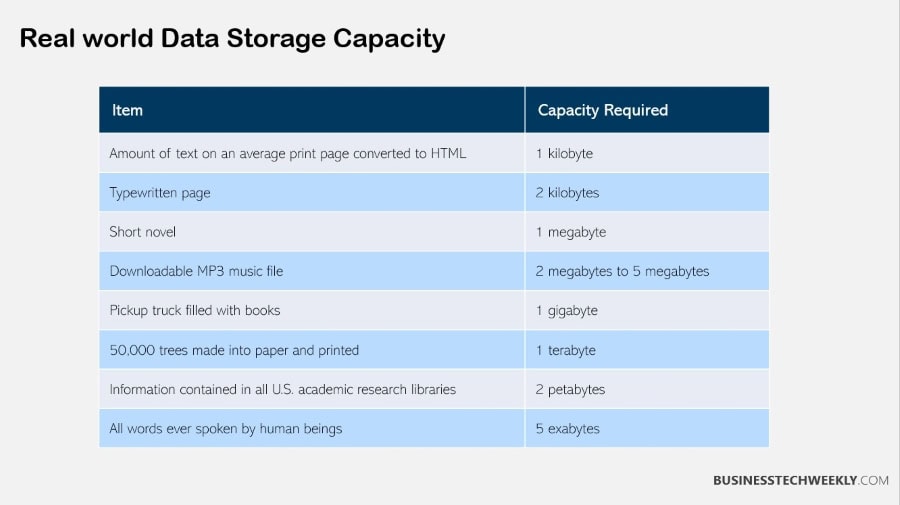

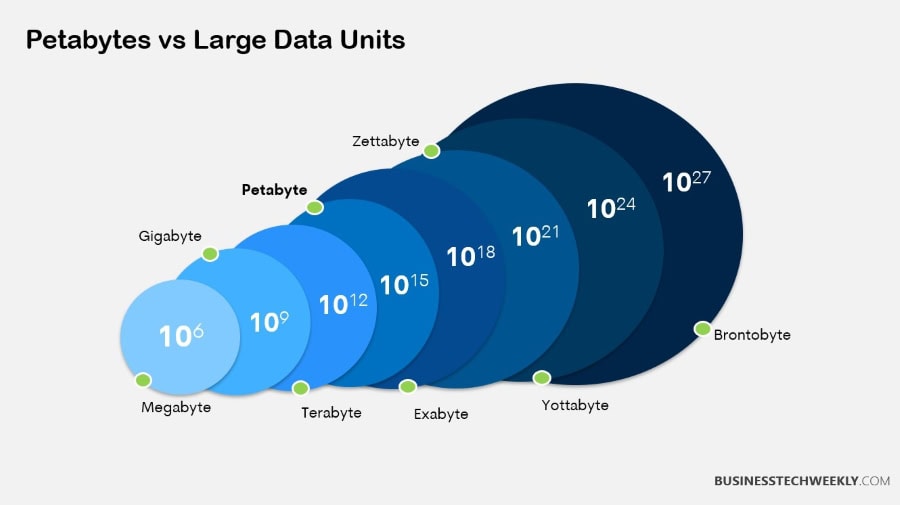

The data measurement hierarchy is often drawn as a pyramid. It starts with the smallest measures, such as bytes, and climbs to enormous amounts such as exabytes and zettabytes.

Each step up the hierarchy is a factor of 1,000 (or 1,024 in binary units), so capacity grows exponentially as we move up.

Smaller data units

Smaller units are the building blocks of the data measurement hierarchy. A kilobyte (KB) is 1,000 bytes, but there’s some historical confusion here, because computers traditionally counted in powers of two and used “kilobyte” for 1,024 bytes.

In 1998, the International Electrotechnical Commission introduced a new term, “kibibyte (KiB)”, to explicitly denote 1,024 bytes.

At the same time, the kilobyte (strictly written “kB” in SI notation) was reserved for 1,000 bytes, although many operating systems still label binary sizes as “KB”. Kilobytes are modest amounts of data, typical of something like a short text document.
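Because both meanings are still in circulation, code that reads sizes from configuration files or user input has to decide which one a suffix means. Here is a minimal Python sketch that treats KB, MB, and so on as powers of 1,000 and KiB, MiB, and so on as powers of 1,024, following the IEC convention described above. The `parse_size` function is hypothetical, and for simplicity it ignores letter case, so it cannot tell bits (b) from bytes (B).

```python
import re
from decimal import Decimal

# Decimal (SI) and binary (IEC) multipliers, keyed by upper-cased suffix.
MULTIPLIERS = {
    "B": 1,
    "KB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12, "PB": 10**15, "EB": 10**18,
    "KIB": 2**10, "MIB": 2**20, "GIB": 2**30, "TIB": 2**40, "PIB": 2**50, "EIB": 2**60,
}

_SIZE_PATTERN = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*([A-Za-z]+)\s*$")

def parse_size(text: str) -> int:
    """Convert a string such as '1.5 PB' or '4 KiB' into a whole number of bytes."""
    match = _SIZE_PATTERN.match(text)
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    number, suffix = match.groups()
    multiplier = MULTIPLIERS.get(suffix.upper())  # case-insensitive: 'kB', 'KB', 'kb' all match
    if multiplier is None:
        raise ValueError(f"unknown unit: {suffix!r}")
    # Decimal avoids binary floating-point rounding for values like '0.1 PB'.
    return int(Decimal(number) * multiplier)

print(parse_size("1 KB"))    # 1000
print(parse_size("1 KiB"))   # 1024
print(parse_size("1 PiB") // parse_size("1 GiB"))  # 1048576
```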

Next is the megabyte (MB), which is 1,000 KB or 1 million bytes. This is where we begin to encounter bigger files, like a high-res image or a short audio clip.

Next in line is the gigabyte (GB), which is 1,000 MB. Gigabytes are the everyday unit for smartphones, whose storage is typically measured in tens or hundreds of gigabytes: plenty of room for pictures, applications, and videos.

For example, a 1 GB file might be a compressed full-length HD movie. These smaller units underpin many of the daily services we rely on.

They help us manage our own data, from storing email to downloading applications, and they give businesses a straightforward way to monitor file sizes.

Larger data units

Once we transition to larger units, the scale is staggering.

A terabyte (TB) is 1,000 GB and is a size you might see for external hard drives or cloud storage. For context, one terabyte could hold about 213 DVD-quality films at 4.7 GB each (the capacity of a single-layer DVD).

After terabytes is the petabyte (PB), which is 1,000 TB. To put that into perspective, a petabyte could store roughly 213,000 DVD-quality films, an unfathomable figure.

Continuing up the ladder, exabytes (EB) are 1,000 PB, and zettabytes (ZB) are 1,000 EB.

These larger units matter because we produce more data every day than ever before. Sectors such as genomics and meteorology routinely generate datasets measured in petabytes.

IDC has estimated that the global datasphere, the total amount of data created, captured, and replicated worldwide, would reach 175 zettabytes by 2025.
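Moving up the ladder is repeated division by 1,000, so a small helper can express any byte count in the largest sensible unit. This Python sketch assumes decimal (SI) units throughout; `human_readable` is our own illustrative name, not a standard library function.

```python
# Decimal (SI) units from bytes to zettabytes; each step is 1,000x the last.
SI_UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]

def human_readable(num_bytes: float) -> str:
    """Format a byte count in the first unit where the value drops below 1,000."""
    value = float(num_bytes)
    for unit in SI_UNITS[:-1]:
        if value < 1000:
            return f"{value:,.1f} {unit}"
        value /= 1000
    return f"{value:,.1f} {SI_UNITS[-1]}"  # anything larger stays in zettabytes

print(human_readable(10**15))   # 1.0 PB

# The 175 ZB projection, expressed in bytes and then in petabytes.
datasphere = 175 * 10**21
print(human_readable(datasphere))          # 175.0 ZB
print(f"{datasphere // 10**15:,} PB")      # 175,000,000 PB
```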

Where does petabyte sit in the hierarchy?

A petabyte certainly has an impressive place in the data measurement hierarchy. It provides an important context for understanding the extreme data requirements of today’s enterprises.

It would require approximately 170 of the highest-end servers or the equivalent of 61,000 desktops to equal 1 PB of RAM.

Ten years ago, working with a petabyte of data was uncommon. Organizations routinely handle this magnitude today, particularly with the advent of big data platforms and cloud services.

For companies, navigating petabyte-scale data involves heavy investment in secure, scalable storage and a clear understanding of the risks and opportunities that come with that growth.

Practical uses of a petabyte

Data Storage Applications

Petabytes are the enterprise storage currency today, especially in the context of data storage capacity requirements.

Companies in almost every industry now face this onslaught of data — whether it’s sales records, customer interactions, product creation, or health records.

For e-commerce, social media, and other industries where rapid expansion happens overnight, petabyte-scale systems make storage expansion transparent and easy.

Platforms such as these create massive amounts of user-created content every single day. This covers everything from product catalogs to customer interaction logs, all requiring consistent, high-performance storage.

IDC projected that by 2025 the global datasphere would reach a colossal 175 zettabytes (ZB), which is equivalent to 175 billion terabytes (TB) or a mind-boggling 175 million petabytes (PB).

Of all industries, healthcare and finance are two that stand to gain the most from petabyte-sized storage. In healthcare, medical imaging systems such as MRI and CT scanners generate large volumes of high-resolution data, and medical centers and research organizations rely on effective storage to preserve it.

Ample storage keeps this data available for future comparison or study. In finance, it’s paramount to safely record transaction details, stock exchange movements, and audit trails.

This makes meeting compliance requirements easier and helps foster more efficient day-to-day operations.

One major case of this storage need is in environmental science.

For example, the National Centers for Environmental Information (NCEI) oversees a mind-blowing 9 petabytes of environmental data. Researchers, policymakers, and advocacy organizations are continuously using this trove of information.

The dbSEABED project, for example, produces high-resolution maps of the ocean floor, with data through October 2023.

Tools such as the Actuaries Climate Index use those same large datasets to quantify the effects of our changing climate.


Role in Cloud Storage

Cloud storage providers depend on data storage capacity to keep up with the explosively growing needs of their customers. Through services like AWS, Microsoft Azure, and Google Cloud, businesses of all sizes can now store and process massive amounts of data.

These shared platforms remove the need for customers to own physical infrastructure. Instead, they run petabyte-capable systems that power everything from the files users back up to applications that serve millions of people.

Enterprise petabyte-scale storage in the cloud provides companies the flexibility and scalability they need to reach their maximum potential. Businesses are able to scale data storage systems as their data expands, sidestepping the initial costs of on-premises options.

Cloud storage also offers redundancy and helps with data recovery, so that even in the worst case, such as an outage or catastrophic system failure, your most critical information can remain safe and accessible.

Streaming platforms are a good example: their entire content libraries are stored in cloud-based petabyte systems, which lets them serve high-quality 4K streams to millions of users at the same time without sacrificing speed or reliability.

Cloud storage is still one of the most cost-effective and practical options for businesses as data demands only increase.

Use in Big Data

To realize its full potential, big data analytics needs petabyte-scale storage where organizations can store, process, and analyze massive datasets.

These systems are critical for industries such as retail, where understanding customer behavior can inform more effective, targeted marketing campaigns.

With petabytes of data, companies can find meaningful patterns and make better-informed decisions. Beyond the logistics of managing such large datasets, retrieval speed and data integrity are major concerns.
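One common way to protect integrity at this scale is to record a checksum for every file when it is written, then recompute it after each transfer or on a regular schedule. A minimal Python sketch using the standard `hashlib` module follows; the function name and the 8 MiB chunk size are our own choices, and reading in chunks is what keeps memory use flat even for very large files.

```python
import hashlib
import io
from typing import BinaryIO

CHUNK_SIZE = 8 * 1024 * 1024  # 8 MiB per read; an illustrative choice

def sha256_of_stream(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> str:
    """Return the SHA-256 hex digest of a binary stream, read incrementally."""
    digest = hashlib.sha256()
    while chunk := stream.read(chunk_size):  # an empty bytes object ends the loop
        digest.update(chunk)
    return digest.hexdigest()

# Compare a stored copy against the original; any flipped bit changes the digest.
original = io.BytesIO(b"sensor=7 status=OK\n" * 100_000)
replica = io.BytesIO(original.getvalue())
print(sha256_of_stream(original) == sha256_of_stream(replica))  # True
```

For a file on disk, the same function works on a stream from `open(path, "rb")`.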

The potential is huge. Take social media platforms, for instance — their user activity data is stored and processed in petabyte sizes.

By studying this data, they’re able to improve user experience and develop more effective algorithms to recommend content.

Petabyte-scale storage also helps power cutting-edge research. Scientific endeavors such as genome mapping and climate modeling depend on analyzing petabytes of data to reveal groundbreaking insights.

The global data volume is expected to increase to 175 zettabytes by 2025.

Consequently, petabyte systems will be more critical than ever in helping enterprises get a handle on big data.


Significance of a petabyte

Importance in technology

A petabyte is a lot more than just the next big unit of digital storage. At 1,000 terabytes, or one million gigabytes, its massive scale has fueled advances in technology and innovation.

Petabyte-scale storage is a necessity for industries that require massive data streams.

This is particularly the case with fast-moving fields such as artificial intelligence (AI) and machine learning (ML). These transformative technologies require large datasets to train and test their algorithms.

Without access to petabytes’ worth of data, their development would be stymied and, frankly, much less impactful.

For AI that must examine millions of images or process years of video footage, petabyte-level storage capacity is what makes these tasks manageable at all.

Emerging fields such as autonomous vehicles and the Internet of Things (IoT) further leverage petabyte storage.

Self-driving cars depend on the constant collection, processing, and analysis of real-time data, including navigation, traffic patterns, and sensor readings.

IoT networks create a steady stream of actionable data from devices, sensors, and machines. Petabyte-scale systems enable organizations to store and process this colossal amount of information efficiently.

These breakthroughs show how petabyte storage is essential for driving the frontiers of today’s technology.

Researchers are exploring alternative materials to enhance storage capacities. For example, Microsoft’s Project Silica investigates glass as a medium for long-term data storage, potentially enabling petabyte-scale archival systems.

Impact on industries

Petabytes have revolutionized industries, allowing them to process and analyze data at larger scales than ever before.

Streaming services such as Netflix and YouTube rely on petabyte-scale storage to deliver millions of hours of video content.

Behind the scenes, they make sure users everywhere can get smooth playback.

To put that into perspective, one petabyte could store more than 200,000 DVD-quality movies, so it is little surprise that petabyte storage is key infrastructure for these platforms.

In healthcare, petabyte storage supports advanced medical imaging and genomic research.

Hospitals and research institutions store and process high-resolution scans and genetic data, which are essential for improving diagnostics and developing personalized treatments.

The finance sector leverages petabytes for fraud detection and risk analysis, as large datasets enable sophisticated algorithms to identify patterns and anomalies.

A notable example is Facebook, which in 2010 managed a 21-petabyte Hadoop cluster for big data analytics, showcasing the power of petabytes in driving operational efficiency.

Its role in data processing

The availability of petabyte-scale storage directly affects the efficiency of data processing systems. With distributed platforms such as the Hadoop Distributed File System (HDFS), organizations can store and process petabytes of data across clusters of commodity machines, keeping operations running even when individual machines fail.
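HDFS stores each file as a sequence of fixed-size blocks and keeps several replicas of every block on different machines. In Hadoop 2 and later the defaults are a 128 MB block size (`dfs.blocksize`, 134,217,728 bytes) and three replicas (`dfs.replication`), both configurable per cluster. This Python sketch estimates how many blocks a petabyte becomes under those defaults, assuming the data sits in large files so that nearly every block is full.

```python
PETABYTE = 10**15
BLOCK_SIZE = 128 * 1024 * 1024   # 134,217,728 bytes: the default dfs.blocksize
REPLICATION = 3                  # the default dfs.replication

def hdfs_blocks(data_bytes: int, block_size: int = BLOCK_SIZE) -> int:
    """Blocks needed to hold data_bytes in one file (ceiling division)."""
    return -(-data_bytes // block_size)

blocks = hdfs_blocks(PETABYTE)
print(f"{blocks:,} blocks")                                  # 7,450,581 blocks
print(f"{blocks * REPLICATION:,} stored replicas")           # 22,351,743 stored replicas
print(f"{PETABYTE * REPLICATION // 10**15} PB of raw disk")  # 3 PB of raw disk
```

Block counts matter because the NameNode keeps metadata for every block in memory, which is one reason HDFS favors a small number of large files over many small ones.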

Quick data access and retrieval are crucial to the user experience on e-commerce websites and social media networks, and therefore to the businesses behind them.

Well-designed petabyte-scale storage can keep data retrieval latency low enough for near-real-time use, which is especially important for industries that depend on immediate data processing.

In 2017, CERN’s data center archived an astounding 200 petabytes in its tape library.

This archive lets scientists return to data from complex experiments for in-depth analysis. More broadly, the ability to scale storage to petabyte levels means businesses can handle growing data volumes without performance gaps, supporting long-term scalability.

Visualizing the scale of a petabyte

When I try to think about how to explain a petabyte, I’ve found that putting it into real-world terms works best.

One petabyte (PB) is equivalent to 1,000 terabytes or one quadrillion bytes of digital information.

This massive amount of data storage capacity can be overwhelming, but visualizing it helps put it into perspective.

Compare to digital media files

A single petabyte can hold the text from 20 million four-drawer filing cabinets full of documents. To get another idea of that scale, consider just how much music you could store on a petabyte of data.

An MP3 file takes about 1 MB per minute of music, so a petabyte holds roughly a billion minutes: about 250 million four-minute songs, or about 1,900 years of continuous music!

That’s basically the same thing as hitting the “play” button and walking away from your playlist for almost two thousand years.

One petabyte is roughly enough storage to contain 11,000 4K feature films!

When it comes to images, the scale is equally impressive.

If we assume an average smartphone photo is 3MB, a petabyte can hold more than 333 million smartphone photos.

If you could lay those photos end to end, they’d be over 48,000 miles long, almost enough to circle the earth’s equator twice.

Such massive storage capacity is a game-changer for content creators and distributors.

They can continue to work with extensive archives of video, music, and imagery without fear of running out of storage space.

Physical storage equivalents

Visualizing a massive amount of data, such as a petabyte, in terms of physical storage makes the scale incredibly concrete. To put that into perspective, it would take roughly 213,000 single-layer DVDs (4.7 GB each) to hold a petabyte of data.

If you stacked those bare discs flat, at 1.2 mm each, the pile would rise about 255 meters, roughly two-thirds of the Empire State Building’s roof height, illustrating how much physical media such a vast amount of information would require.
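The disc count and stack height follow from two physical constants: a single-layer DVD holds 4.7 GB, and a bare disc is 1.2 mm thick. This Python sketch assumes decimal units and discs stacked flat without cases; 381 m is the height of the Empire State Building’s roof, excluding the antenna.

```python
PETABYTE = 10**15
DVD_BYTES = 4_700_000_000       # single-layer DVD capacity: 4.7 GB (decimal)
DVD_THICKNESS_M = 0.0012        # bare disc thickness: 1.2 mm
EMPIRE_STATE_ROOF_M = 381       # roof height, excluding the antenna

discs = -(-PETABYTE // DVD_BYTES)   # ceiling division: a partial disc still counts
stack_m = discs * DVD_THICKNESS_M

print(f"{discs:,} DVDs")                                          # 212,766 DVDs
print(f"{stack_m:,.0f} m tall")                                   # 255 m tall
print(f"{stack_m / EMPIRE_STATE_ROOF_M:.2f} of the roof height")  # 0.67 of the roof height
```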

Another example from the tech world involves floppy disks. To store a petabyte, you’d need roughly 746 million old-style 3.5-inch floppy disks, which would weigh over 14,800 tons, far more than the Eiffel Tower!

Books provide a different but equally helpful analogy.

If a book averages 1 MB of text, a petabyte could hold the text of one billion books. Printed, that would be a collection far larger than any library on Earth.

Provide real-world examples

Petabytes aren’t just academic—they’re a practical reality used today. The Library of Congress already oversees over 20 petabytes of data.

This multi-petabyte collection contains everything from encyclopedic cultural history to multimedia experimental archives.

Large-scale genomics research, building on the legacy of the Human Genome Project, works at petabyte scale too.

Researchers comb through petabytes of genetic data to accelerate the course of research and medical breakthroughs.

Even the streaming services we all use every day are backed by petabyte-level storage to serve massive content libraries to global audiences instantly.

These examples illustrate the concrete benefits, from furthering scientific inquiry to improving our daily gaming and streaming.

Challenges with petabyte-scale data

Indeed, managing petabyte-scale data storage capacity is no small feat. It presents new challenges that require a combination of cutting-edge infrastructure, intelligent technology, and effective data management strategies. Let’s take a closer look.

Storage Infrastructure Needs

Building the infrastructure to store petabyte-scale data is no small task. For one thing, the infrastructure must be scalable and flexible.

That’s because data doesn’t stop growing; it continues to expand exponentially.

Enterprise data volume is growing by an estimated 42.2% annually, which means it doubles roughly every two years.
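Annual growth rates and doubling times are two views of the same exponential curve: a quantity growing at rate r per year doubles after ln 2 / ln(1 + r) years. This small Python sketch converts in both directions; the function names are our own. It shows that 42.2% a year implies doubling in just under two years, while a 30-month doubling time would correspond to only about 32% a year.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years until a quantity growing at a fixed annual rate doubles."""
    return math.log(2) / math.log(1 + annual_growth)

def annual_growth_for_doubling(years: float) -> float:
    """Fixed annual growth rate that doubles a quantity in the given number of years."""
    return 2 ** (1 / years) - 1

print(f"{doubling_time_years(0.422) * 12:.1f} months to double at 42.2% a year")  # 23.6 months
print(f"{annual_growth_for_doubling(2.5):.1%} a year doubles in 30 months")        # 32.0% a year
```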

A petabyte-scale environment allows enterprises to easily grow storage capacity without drastic changes.

Data centers are a key component of all this. They provide the physical infrastructure to house the servers needed to handle these colossal volumes. However, not every enterprise has the budget for dedicated on-premises data centers.

This is where cloud storage solutions come into play. Cloud-based platforms, such as AWS or Google Cloud, provide flexible and economical solutions designed to scale to increasing requirements.

Many organizations adopt a hybrid storage approach, keeping sensitive information on on-site physical storage while using the flexibility of cloud solutions to improve scalability.

Reliability is the other big consideration. Data redundancy—storing multiple copies of data—provides an extra layer of protection against loss due to hardware failures or cyberattacks.

For example, if a particular server fails, redundant systems allow operations to continue without a hitch.

Consider it a safety net for companies that are building their future on data-heavy applications.
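Redundancy has a direct capacity cost: with full replication, every extra copy adds the entire dataset again. This Python sketch models only whole-copy replication, the simplest form of redundancy; `raw_capacity` is an illustrative name.

```python
PETABYTE = 10**15

def raw_capacity(usable_bytes: int, copies: int) -> int:
    """Raw storage needed to keep `copies` complete replicas of the data."""
    if copies < 1:
        raise ValueError("at least one copy is required")
    return usable_bytes * copies

for copies in (1, 2, 3):
    raw_pb = raw_capacity(PETABYTE, copies) // PETABYTE
    # With n full copies, the data survives the loss of up to n - 1 of them.
    print(f"copies={copies}: {raw_pb} PB raw, tolerates {copies - 1} lost copies")
```

Three full copies of one petabyte therefore need 3 PB of raw disk, which is the price of surviving two simultaneous failures.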

Data Transfer Limitations

Moving petabyte-scale data is a whole different hurdle. The sheer volume of data at play introduces bandwidth and speed limitations.

For instance, transferring just 1 PB of data over a 100 Gbps network link would take more than 22 hours, even at full line rate!

Now consider companies operating on several petabytes—these delays accumulate quickly. Bandwidth constraints typically render standard migration approaches unfeasible.
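The arithmetic behind these delays is straightforward: multiply bytes by 8 to get bits, then divide by the link rate. This Python sketch assumes decimal units (1 Gbps = 10^9 bits per second); the `efficiency` parameter is a hypothetical knob for the fraction of line rate actually achieved, since real transfers rarely sustain 100%.

```python
def transfer_hours(data_bytes: int, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move data_bytes over a link of link_gbps, at the given efficiency."""
    bits = data_bytes * 8
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / 3600

PETABYTE = 10**15
print(f"{transfer_hours(PETABYTE, 100):.1f} h")        # 22.2 h at the full 100 Gbps
print(f"{transfer_hours(PETABYTE, 100, 0.7):.1f} h")   # 31.7 h at an assumed 70% efficiency
print(f"{transfer_hours(PETABYTE, 10):.0f} h")         # 222 h (over nine days) at 10 Gbps
```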

Some firms rely on physical data transfer solutions, such as shipping hard drives, to expedite the process.

While that may seem old-fashioned, it is sometimes faster to trust a truck than the internet.

These constraints have enormous repercussions on the way enterprises govern and transfer data.

For instance, firms performing worldwide operations might find it difficult to synchronize petabyte-scale datasets across various geographical locations immediately.

This can be detrimental to the success of projects ranging from analytics to customer experience, emphasizing the importance of developing more intelligent transfer strategies.

Security and Management Issues

Security quickly rises to the top of the list of considerations when managing petabyte-scale data.

Cyber threats, such as ransomware attacks, pose serious risks to any enterprise with valuable data assets. Robust measures are imperative to protect this sensitive information.

Encryption, multi-factor authentication, and regular security audits are non-negotiable components of any petabyte-scale strategy. Here, data governance is key.

As datasets increase in size, the challenge of remaining compliant with regulations such as HIPAA or GDPR is compounded.

Without appropriate governance in place, organizations could face large fines or reputational harm.

Robust management practices, such as having unambiguous policies in place and using automated monitoring tools, can go a long way toward reducing these risks.

Future of petabyte usage

Growth in Data Generation

As we’ve noted time and again, the growth of data generation has been astonishing, and it continues to grow at a breakneck pace.

Each day, the digital universe expands by an astounding 328.77 million terabytes of data.
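Figures like this are easier to grasp in bigger units. Here is a quick Python conversion, assuming decimal units and a 365-day year; the input is the estimate quoted above, not an independent measurement.

```python
TERABYTE = 10**12
PETABYTE = 10**15
EXABYTE = 10**18
ZETTABYTE = 10**21

daily_bytes = 328.77e6 * TERABYTE   # 328.77 million terabytes per day

print(f"{daily_bytes / PETABYTE:,.0f} PB per day")           # 328,770 PB per day
print(f"{daily_bytes / EXABYTE:.2f} EB per day")             # 328.77 EB per day
print(f"{daily_bytes * 365 / ZETTABYTE:.0f} ZB per year")    # 120 ZB per year
```

At that pace, the world creates more than 300,000 petabytes every day.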

A few key trends are behind this explosive increase, particularly the rise of cloud computing and the consumption of higher-quality media content across connected devices.

More of us are adopting connected devices and streaming media in higher quality, and industries such as healthcare and e-commerce keep expanding their digital footprint. Virtual reality (VR) and extended reality (XR) technologies have also exploded in use and interest.

They’re pushing the limits of what we think immersive experiences can be.

With the ascendance of 8K video, the need for data storage capacity keeps getting more critical.

Therefore, the need for huge storage capacities is evident. The bigger the data, the tougher the test for storage. Businesses need secure and scalable options to handle this growth, especially as new applications emerge that generate more complex datasets.

For example, one petabyte is like 20 million four-drawer filing cabinets full of text.

Managing these kinds of volumes is only possible with enormous storage infrastructures and effective data storage solutions that allow rapid deep dives and exploration through the data. Industries such as media, healthcare, and environmental research need to adopt petabyte-scale storage systems.

It’s not just an alternative anymore; it is the lifeblood of their operations. According to the National Centers for Environmental Information (NCEI), users fetched more than 9 petabytes of its environmental data online in a single recent year.

That statistic points to a growing dependence on advanced data management capabilities.

Advancements in Storage Technology

Emerging technologies are rapidly making petabyte storage more efficient and accessible.

Cloud storage solutions, to take just one example, are widely available, affordable, and easily scalable.

Some providers have also eliminated egress fees, a testament to how innovation is making massive storage cost-effective. Many of these solutions are built with the needs of small and midsize businesses (SMBs) in mind, providing flexibility without stretching the budget.

Innovations in data compression are equally at the heart of these changes. With the help of advanced algorithms we’re able to shrink the physical space that data takes up and still retain the quality.
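Lossless compression is the kind that retains every bit of the original. The sketch below uses zlib, the long-established DEFLATE algorithm in Python’s standard library, rather than any of the newer algorithms alluded to above, and the sample data is synthetic, repetitive log text, which compresses far better than typical photos or video.

```python
import zlib

# Synthetic, highly repetitive log lines (390,000 bytes in total).
log_data = b"".join(
    f"2024-01-01T00:00:{i % 60:02d} sensor=7 status=OK\n".encode() for i in range(10_000)
)

compressed = zlib.compress(log_data, level=9)   # level 9 = best compression
restored = zlib.decompress(compressed)

assert restored == log_data   # lossless: the original bytes come back exactly
ratio = len(log_data) / len(compressed)
print(f"{len(log_data):,} bytes -> {len(compressed):,} bytes ({ratio:.0f}x smaller)")
```

Already-compressed formats such as JPEG photos or H.264 video usually shrink very little further, so real-world savings depend heavily on the data.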

New storage systems—including solid-state drives (SSDs) and DNA-based storage—are changing the game in speed and storage capacity.

These innovations are revolutionizing how organizations tackle petabyte-scale data, guaranteeing that storage infrastructures stay nimble and economical.


Emerging Trends in Data Handling

The way we handle data is changing just as quickly as storage technology. AI and ML have become essential tools in the era of petabyte datasets.

These emerging technologies are able to analyze, categorize, and optimize data at speeds that would be humanly infeasible.

This is critical as all industries are moving to petabyte and even exabyte storage—a single exabyte is equal to 1,000 petabytes.

In just a few years, the top media conglomerates will cross this threshold, making AI-driven management both practical and necessary for efficient scale.

Adaptability is equally important. As the exabyte era approaches, companies need to adopt solutions that will scale as their data needs expand.

For example, the rise of 8K video production will soon make 4K seem outdated, requiring storage systems to accommodate even larger files seamlessly.

By adopting flexible, forward-looking data management strategies, organizations can position themselves not only to keep pace with these trends but also to improve operational effectiveness.

Key Points to Remember

- A petabyte is 1,000 terabytes, one million gigabytes, or 1 quadrillion bytes, and it is commonly abbreviated as “PB.”

- One petabyte can store about one billion 1 MB pictures or roughly 20,000 high-definition feature-length films at about 50 GB each.

- Petabytes are the workhorses of petascale and exascale data environments. You will see them in data centers, cloud storage and in industries such as healthcare, finance and entertainment.

- Handling petabyte-scale data requires advanced storage infrastructure. It demands comprehensive security protocols and effective data governance to ensure trustworthiness and security.

- The pace of data creation is increasing exponentially. Consequently, the need for petabyte-level storage will explode, driven by growth in AI, machine learning, and big data analytics.

- Future innovations in storage technology, such as enhanced compression and scalable cloud solutions, will make managing petabyte-scale data more accessible and efficient.

Frequently Asked Questions

What is a petabyte?

A petabyte (PB) equals 1,000 terabytes, or one quadrillion (10^15) bytes of digital information. Its binary counterpart, the pebibyte (PiB), is 1,024 tebibytes, or 2^50 bytes.

How does a petabyte fit into the data measurement hierarchy?

A petabyte sits between the terabyte and the exabyte in the data measurement hierarchy: it is 1,000 terabytes and one-thousandth of an exabyte. In binary units, a pebibyte is 1,024 tebibytes and 1/1,024 of an exbibyte.

How many gigabytes (GB) are in petabytes (PB)?

In decimal (SI) units, there are 1,000 gigabytes (GB) in a terabyte (TB) and 1,000 terabytes (TB) in a petabyte (PB).

Therefore, to find out how many gigabytes are in a petabyte, you multiply the two values together: 1 PB = 1,000 TB × 1,000 GB/TB = 1,000,000 GB. In binary units, commonly used by operating systems, the same calculation uses 1,024 at each step: 1 PiB = 1,024 TiB × 1,024 GiB/TiB = 1,048,576 GiB.

What are some practical uses of a petabyte?

Big data industries require petabytes of data storage capacity to effectively manage and work with massive, complex datasets.

This essential storage underlies numerous cloud computing services, video streaming platforms, and scientific research.

Are Petabyte Hard Drives available?

A petabyte hard drive would need to store about one million gigabytes of data, which is equivalent to 1,000 terabytes. These drives are likely to be used initially in enterprise computing rather than by consumers.

Samsung has hinted at the development of the world’s first petabyte-scale solid-state drive (PBSSD), aiming to “help servers reduce energy usage and maximize rack capacity and space.”

Why is a petabyte significant?

A petabyte is a key threshold for data storage and a marker of big data capability, vital for artificial intelligence and high-resolution video storage.

Can you visualize the scale of a petabyte?

A single petabyte of data storage can hold more than 13 years of HD video or around 500 billion pages of typical text-based documents, showcasing an enormous amount of digital information.

What are the challenges with managing petabyte-scale data?

Controlling petabytes of data storage goes beyond just storage systems and power; it also involves sophisticated cybersecurity to ensure the integrity and safety of digital information from breaches.

What does the future of petabyte usage look like?

As data needs grow, effective data storage solutions involving petabytes will become more common, driving innovation among cloud computing providers and storage vendors.