What is Data Scrubbing and Why Should You Care?


What is Data Scrubbing?

Define Data Scrubbing

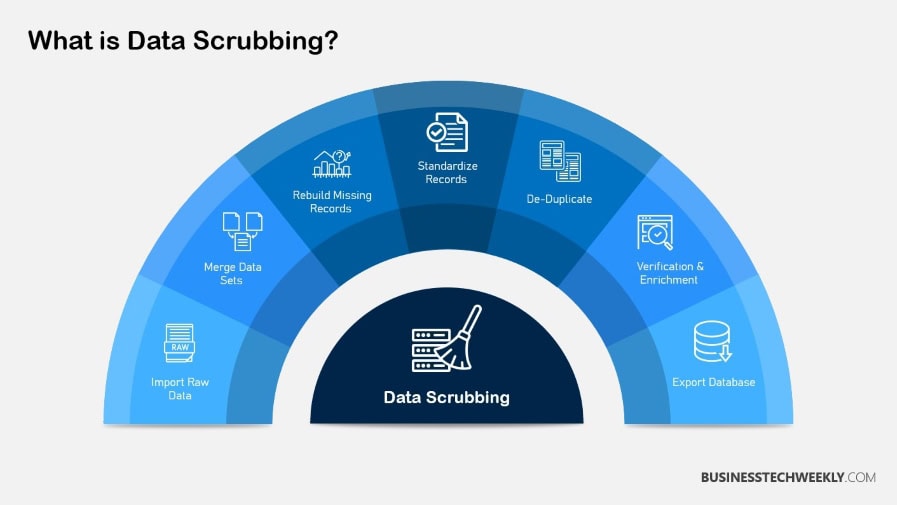

Data scrubbing is the process of identifying and correcting or removing corrupt or inaccurate records from a dataset. This step is necessary in the data management process to help protect data integrity.

When you scrub your data, you identify and correct or delete incorrect data entries, a step that can make or break the quality of your analysis.

Data scrubbing relies on several methods to maximize accuracy, and validation and standardization are key to keeping data entries consistent.

For instance, standardizing data formats helps ensure that your data integrates smoothly with other software systems, reducing future compatibility headaches.
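To make standardization concrete, here is a minimal Python sketch that normalizes phone numbers to one format. It assumes 10-digit US numbers, and the function name `standardize_us_phone` and the `XXX-XXX-XXXX` target format are illustrative choices, not part of any particular tool.

```python
import re
from typing import Optional


def standardize_us_phone(raw: str) -> Optional[str]:
    """Normalize a US phone number to the single format 'XXX-XXX-XXXX'.

    Assumes 10-digit US numbers, optionally prefixed with country code 1.
    Returns None when the input can't be interpreted under that assumption.
    """
    digits = re.sub(r"\D", "", raw)  # keep only the digits
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the leading country code
    if len(digits) != 10:
        return None  # leave invalid entries for manual review
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


for raw in ["(555) 123-4567", "555.123.4567", "+1 555 123 4567", "123-45"]:
    print(raw, "->", standardize_us_phone(raw))
```

Returning `None` instead of guessing keeps unrecognizable entries visible so someone can fix them by hand.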

With half of IT budgets allocated to data rehabilitation, it’s evident that organizations prioritize this process to maintain data integrity and reliability.

Importance of Data Scrubbing

Clean data is essential for effective analysis and informed decision-making. Quality data protects against the dangers of relying on outdated or incomplete information, which can lead to expensive errors.

In fact, businesses risk up to 20% of their revenue due to the negative impacts of data quality issues, emphasizing the need for a robust data cleansing process.

Moreover, data scrubbing helps organizations meet regulatory requirements and reduces the risk of legal penalties.

When data is reliable and consistent, organizations can confidently make data-informed strategic decisions that drive success and optimize their business operations.

Who Needs Data Scrubbing?

Data scrubbing is a necessity for businesses in every industry to ensure their data is accurate and up-to-date.

Data professionals, such as data scientists and analysts, rely on clean, accurate datasets to carry out effective analysis and generate insights.

Organizations needing to manage extensive datasets should make data scrubbing a top priority to avoid data corruption and wasted resources. Employees can spend as much as 50% of their production time on manual data quality processes.

Additionally, investing in data scrubbing leads to an increase in productivity and stronger operational efficiency.

In the US alone, businesses lose $3.1 trillion annually due to poor data quality.

Data Scrubbing vs Data Cleaning

Data scrubbing is a more focused, tactical process and a crucial part of good data stewardship. It is much narrower in scope and practice, specifically focusing on finding and correcting inaccuracies, inconsistencies, and mistakes within datasets.

This labor-intensive process is what makes data not just abundant, but also accurate and trustworthy.

Data cleaning is a broader process that combines art and science. Scrubbing is one of its most effective methods, working to keep the overall quality of data high.

Though both involve a focus on data quality, the scope and methodology for these two processes vary greatly. Data cleaning is a broad term that encompasses many activities—from deleting duplicate entries to standardizing different fields.

This holistic approach greatly improves the accuracy of the data.

Differences Between Scrubbing and Cleaning

Scrubbing zeroes in on particular data issues, addressing problems like incorrect spellings or misplaced data fields. It often employs automated tools capable of swiftly rectifying these errors, making it highly efficient for maintaining data integrity.

On the other hand, data cleaning tackles the bigger picture of data quality, often incorporating manual checks and evaluations to ensure thoroughness.

While scrubbing focuses intently on upholding data reliability, cleaning takes on a more expansive role, ensuring that datasets are not only accurate but also coherent and useful for analysis.

Unique Roles in Data Quality Assurance

Data scrubbing has its own role in ensuring data accuracy and reliability. It serves as the first line of defense, which is key to making data actionable.

At the same time, data cleaning works in tandem with scrubbing to tackle broader quality concerns, fortifying the entire dataset’s integrity. Both processes are important for ensuring high standards for data management.

They enable companies to achieve better outcomes by informing their decisions with the best available data. Data scrubbing can be performed using a variety of tools, from basic Excel functions to more complex software.

Unfortunately, the process can be lengthy. It's also critical to scrub regularly, at least every three to six months, to maintain data quality over time.

Impact on Business Processes

Customer Data Quality and Business Impact

Data scrubbing directly influences customer data quality. When you take the time to refine the data your business is built on, you lay the groundwork for effective marketing campaigns.

With clean and accurate datasets, businesses can implement more specific, targeted marketing strategies, making it easier to convert high-quality leads.

This targeted approach curbs the poor business practices that stem from a lack of data-driven insight.

It also helps businesses avoid part of the estimated $3.1 trillion that poor data quality costs the US each year. Keeping up with precise customer data is no longer just important – it's necessary to provide tailored services that improve customer experiences and loyalty.

Yet only 57% of businesses are prioritizing their data quality.

With full data scrubbing protocols in place, you'll be ahead of the curve and your business processes will run more effectively.

Organizations make (often erroneous) assumptions about the state of their data and continue to experience inefficiencies, excessive costs, compliance risks and customer satisfaction issues as a result.

Data Scrubbing in Data Management

As one of the critical aspects of data management, data scrubbing helps keep your systems performing reliably and at their best. It creates uniformity and dependability across datasets.

This transformation turns raw data into a powerful tool for driving your success.

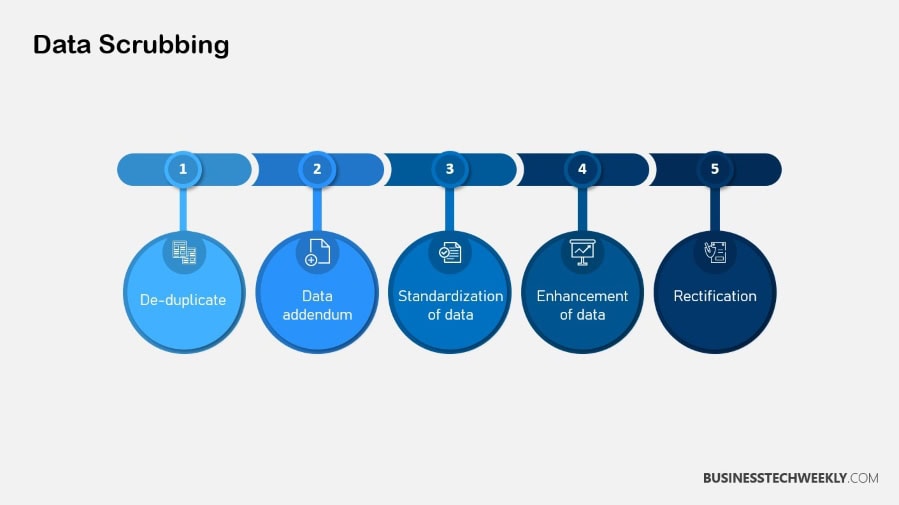

Consistent and proactive data scrubbing is key to ensuring long-term data integrity. It further aids in removing common mistakes like duplicates that frequently arise when combining datasets from different origins.

By regularly cleaning your data, you help uphold compliance standards at a time when regulations are stricter than ever.

This multi-step, iterative process isn’t just a one-time undertaking but rather a repeatable workflow that ensures data is consistently accurate and trusted.

Integration and Migration Benefits

In the case of system migrations, data scrubbing becomes absolutely necessary. It enables more seamless data integration, reducing the cumbersome, error-prone work often required to transfer data between systems.

By preparing datasets in advance through scrubbing, you help ensure they are ready for use in new systems or applications. This preparation makes for a smoother transition, so businesses can begin the integration process with peace of mind.

Clean data helps migrations run smoothly and gives the new system a consistent foundation for data-driven decisions and analytics.

As a result, your data becomes a valuable asset that fuels innovation and efficiency wherever it lives, on any device or cloud.

Steps for Effective Data Scrubbing

When you begin the data scrubbing process, a step-by-step method is essential for producing thorough, trustworthy data.

Here are the core steps involved in the data scrubbing process:

- Assess data quality to identify issues.

- Remove duplicate or irrelevant data entries.

- Correct inaccuracies through validation.

- Standardize data formats for consistency.
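The first step, assessing data quality, can start with a simple profile of the dataset. The Python sketch below assumes records are plain dictionaries and treats two records as duplicates when a chosen set of key fields match; the `profile` function and the sample `customers` data are hypothetical.

```python
from collections import Counter


def profile(records, key_fields):
    """Summarize common quality issues in a list of dict records.

    Counts missing (None or blank) values per field and duplicate records,
    where two records count as duplicates if all `key_fields` match.
    """
    fields = sorted({f for r in records for f in r})
    missing = {
        f: sum(1 for r in records if r.get(f) is None or str(r.get(f)).strip() == "")
        for f in fields
    }
    keys = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    duplicates = sum(n - 1 for n in keys.values() if n > 1)  # extra copies only
    return {"rows": len(records), "missing": missing, "duplicates": duplicates}


customers = [
    {"id": 1, "email": "ana@example.com", "city": "Austin"},
    {"id": 2, "email": "ben@example.com", "city": ""},
    {"id": 3, "email": "ana@example.com", "city": "Austin"},
]
print(profile(customers, key_fields=["email", "city"]))
```

A profile like this tells you which of the later steps (deduplication, type conversion, handling missing values) deserve attention first.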

1. Remove Duplicate or Irrelevant Data

Identifying and removing duplicate records is a key step. It maintains the integrity of your data and makes sure every entry is adding value to your analysis.

Irrelevant data, however, can skew your results and result in misleading conclusions. Scheduled audits are key to identifying and removing duplicate or superfluous data points, keeping your data as clean as possible.

Whenever you’re working with customer data, ensure that each customer is represented just one time.

This protects against inflated metrics and guarantees reporting of accurate insights.
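Here is a minimal Python sketch of representing each customer only once. It assumes the email address identifies a customer and that the first occurrence should be kept; real matching rules are often fuzzier, so treat `dedupe_customers` as a hypothetical starting point.

```python
def dedupe_customers(records):
    """Keep one record per customer, matching on a normalized email address.

    Assumes email is the customer identifier; the first occurrence wins.
    """
    seen = set()
    unique = []
    for record in records:
        key = record["email"].strip().lower()  # " ANA@example.com" matches "ana@example.com"
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


rows = [
    {"name": "Ana", "email": "ana@example.com"},
    {"name": "Ana P.", "email": " ANA@example.com"},
    {"name": "Ben", "email": "ben@example.com"},
]
print([r["name"] for r in dedupe_customers(rows)])  # ['Ana', 'Ben']
```

Normalizing the key before comparing matters: without `.strip().lower()`, the two Ana records would both survive and inflate the customer count.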

2. Avoid Structural Errors

Standardizing data types and formats is a critical component to ensuring consistency and avoiding structural breaks in the data. Such errors can make it difficult to perform and report on data analysis accurately.

Creating and applying detailed naming conventions and format guidelines will help. Choose your date format from the start!

Deciding if you want to use “MM/DD/YYYY” or “YYYY-MM-DD” now will save you a lot of trouble later on.
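Once you've chosen a format, existing values need converting to it. This Python sketch targets "YYYY-MM-DD" and assumes the raw data contains only the input formats listed in the hypothetical `KNOWN_FORMATS` tuple; note that an ambiguous value such as "04/05/2024" will be read as MM/DD under that assumption.

```python
from datetime import datetime
from typing import Optional

# Input formats assumed to appear in the raw data (illustrative list).
KNOWN_FORMATS = ("%m/%d/%Y", "%Y-%m-%d", "%d %b %Y")


def to_iso_date(raw: str) -> Optional[str]:
    """Convert a date string to the single agreed format YYYY-MM-DD."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue  # try the next known format
    return None  # unrecognized: flag for review instead of guessing


print(to_iso_date("03/14/2024"))   # 2024-03-14
print(to_iso_date("14 Mar 2024"))  # 2024-03-14
```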

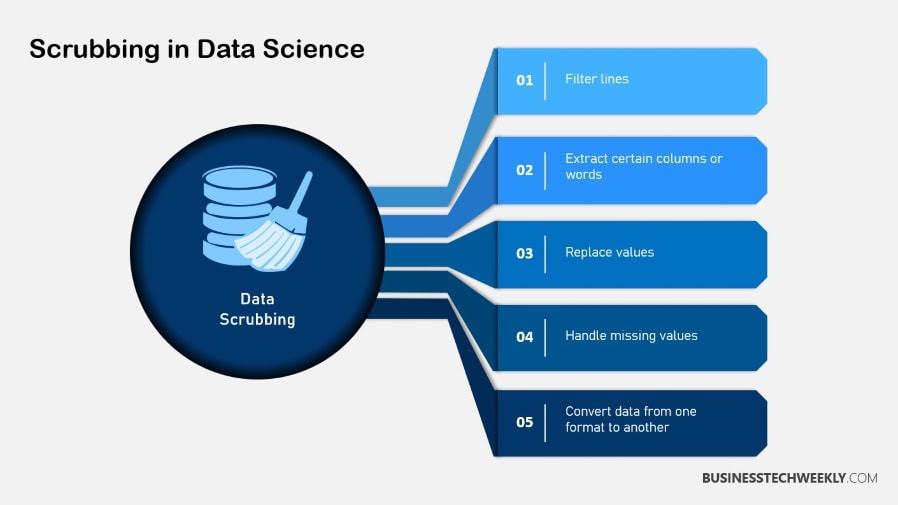

3. Convert Data Types Appropriately

Making sure that data types are correct is essential for proper analysis. Mismatched data types will result in processing errors and ultimately inaccurate results, undermining the reliability of your conclusions.

Monitor and update your data types to suit your analytic requirements.

This is a great practice to develop to ensure your data remains usable within your tools and workflow.

One simple example is converting text-based numbers into numerical data types to ensure proper calculations.
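For example, amounts stored as text like "$1,234.50" cannot be summed until they are converted. This Python sketch assumes "." is the decimal separator and "," groups thousands; `parse_amount` is a hypothetical helper, and `Decimal` is used here to avoid floating-point rounding on currency (a `float` would also work for many analyses).

```python
from decimal import Decimal, InvalidOperation
from typing import Optional


def parse_amount(raw: str) -> Optional[Decimal]:
    """Convert a text-based number such as '$1,234.50' into a Decimal.

    Assumes '.' is the decimal separator and ',' groups thousands.
    Returns None for values that still aren't numeric after cleanup.
    """
    cleaned = raw.strip().replace(",", "").replace("$", "")
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        return None


values = ["1,234.50", "$99", "n/a"]
amounts = [parse_amount(v) for v in values]
print(sum(a for a in amounts if a is not None))  # 1333.50
```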

4. Handle Missing Values Effectively

Handling missing values is important for the overall integrity of the dataset. Advanced strategies like imputation or targeted removal can then be used to address those gaps.

Algorithms that predict and impute these gaps can be particularly powerful, keeping your dataset as complete as possible.

For instance, using machine learning models to estimate missing values based on available data can enhance the completeness of your dataset.
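Model-based imputation is beyond a short example, so the Python sketch below swaps in a much simpler technique, median imputation, to show the basic idea of filling gaps from the observed values. The `impute_median` name and the sample `ages` data are illustrative assumptions.

```python
from statistics import median


def impute_median(values):
    """Fill missing entries (None) with the median of the observed values.

    A deliberately simple stand-in for model-based imputation.
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


ages = [34, None, 29, 41, None]
print(impute_median(ages))  # [34, 34, 29, 41, 34]
```

The median is less sensitive to outliers than the mean, which is why it is a common default for simple imputation.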

5. Inform and Collaborate with Team

Effective communication between all team members while data scrubbing is key. Collaboration encourages the exchange of information and helps to pinpoint where data quality problems may exist.

Collaboration makes data scrubbing processes more efficient, helping to achieve more effective outcomes and a common understanding.

Frequent check-ins and progress updates with both internal and external stakeholders help keep everyone aligned and informed.

Best Practices for Data Scrubbing

Data scrubbing, a core part of data cleaning, is essential to keeping data high-quality. Any application that uses the data must be able to count on it as reliable.

The process involves locating and correcting mistakes, discrepancies, or inaccuracies in datasets.

Here are some best practices to enhance the effectiveness of your data scrubbing efforts:

- Establish specific objectives to guide your data scrubbing initiatives. Having clear goals also allows you to identify and measure what success looks like. Perhaps most importantly, they make sure your data matches up with the larger company goals.

- Clearly define success. A concrete target, such as a 5% increase in data accuracy, focuses your efforts and helps you decide where to deploy limited resources.

- Keeping a detailed record of the scrubbing process promotes transparency and accountability. Having a record of your process allows you to go back and find where you went wrong if something breaks.

- A record also clarifies what actions were taken and why. This matters not only for you but even more so in collaborative environments, where multiple team members may be involved in the scrubbing process.

- Not all data issues are created equal. By focusing on your most critical issues, you address the most impactful mistakes first. This strategy results in tangible, near-term enhancements in data quality.

- For example, verifying and maintaining customer contact information can avoid costly communication errors and improve overall customer experience.

- Automation plays a significant role in efficient data scrubbing. Automating repetitive steps such as formatting or deduplication saves time and improves accuracy by minimizing human error.

- Akkio aims to make data cleaning simple and efficient. It may also be able to transform data based on plain natural language instructions, meaning you won't need advanced coding expertise.
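Keeping a detailed record of the process can be as simple as logging every change alongside the reason for it. The Python sketch below keeps an in-memory, append-only log; `apply_fix`, `change_log`, and the record fields are hypothetical, and in practice you would persist the log to a file or table.

```python
import json
from datetime import datetime, timezone

change_log = []  # append-only record of every scrubbing change


def apply_fix(record, field, new_value, reason):
    """Update one field and log what changed, when, and why."""
    change_log.append({
        "record_id": record["id"],
        "field": field,
        "old": record.get(field),
        "new": new_value,
        "reason": reason,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    record[field] = new_value


customer = {"id": 7, "email": " Ana@Example.COM "}
apply_fix(customer, "email", "ana@example.com", "trim whitespace and lowercase")
print(json.dumps(change_log, indent=2))
```

Because the old value is stored, any change that turns out to be wrong can be traced and reverted.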

Define Clear Goals for Data Quality

Establishing specific, achievable goals for data scrubbing is essential. Objectives should serve as a roadmap, guiding every cleaning effort toward its ultimate purpose.

Clear, defined goals—such as adopting a common data standard or minimizing duplicate data—provide a target to work toward.

Aligning these goals with business objectives is critical to ensuring that efforts to clean and maintain data actively drive improved performance across the organization.

Validate Changes and Monitor Quality

Validating changes during the scrubbing process is key to making sure that the corrections made actually improve data quality and don’t cause new errors.

By continuously monitoring data quality, you can identify emerging issues early on, before they have a chance to escalate into bigger problems.

Consistency checks like these are key to ensuring the quality of the dataset in the long run.
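One way to validate changes is to measure the share of values failing a set of rules before and after scrubbing; the rate should drop, never rise. This Python sketch uses two hypothetical rules (a loose email pattern and an age range) that you would replace with your own.

```python
import re

# Hypothetical rules for a customer table; adapt them to your own data.
RULES = {
    "email": lambda v: bool(re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v or "")),
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}


def error_rate(records):
    """Share of field values that fail a validation rule (0.0 = all valid)."""
    checks = [RULES[f](r.get(f)) for r in records for f in RULES]
    if not checks:
        return 0.0
    return 1 - sum(checks) / len(checks)


before = [{"email": "ana@example", "age": 34}, {"email": "ben@example.com", "age": -1}]
after = [{"email": "ana@example.com", "age": 34}, {"email": "ben@example.com", "age": 41}]
print(error_rate(before), error_rate(after))  # 0.5 0.0
assert error_rate(after) <= error_rate(before), "scrubbing introduced new errors"
```

Running the same check on a schedule turns it into the continuous monitoring described above.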

Schedule Regular Data Scrubbing Sessions

Data scrubbing is an ongoing practice, not a one-off task.

Scheduling routine scrubbing sessions as a regular part of your data management workflow keeps data quality high over time.

Removing even 1 percent of junk or incorrectly labeled data can free up significant resources.

Once you've identified such records, you can either delete them or archive them in another format.
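If you prefer archiving to deleting, a small Python sketch can separate junk records and write them to CSV so they can be restored later. The junk rule (blank names and "TEST" entries) is a hypothetical example, and an in-memory buffer stands in for a real archive file.

```python
import csv
import io


def split_junk(records, is_junk):
    """Partition records into (kept, junk) using a caller-supplied predicate."""
    kept, junk = [], []
    for r in records:
        (junk if is_junk(r) else kept).append(r)
    return kept, junk


def archive_csv(rows, fieldnames, stream):
    """Write junk rows to CSV so they can be restored later instead of deleted."""
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)


records = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "TEST"}, {"id": 3, "name": ""}]
# Hypothetical junk rule: blank names and obvious test entries.
kept, junk = split_junk(records, lambda r: r["name"].strip() in {"", "TEST"})
buffer = io.StringIO()  # swap for open("archive.csv", "w", newline="") in practice
archive_csv(junk, ["id", "name"], buffer)
print(len(kept), "kept;", len(junk), "archived")
```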

Tools for Data Scrubbing

When you're out there on the mean streets of data scrubbing, the right data cleansing tool can make the difference between success and utter failure.

Let's take a look at some of the most popular data scrubbing tools out there.

Overview of Top Data Scrubbing Tools

Data scrubbing tools differ significantly in functionality and features, so each suits different needs.

Here’s a comparison table of some well-known tools:

| Tool Name | Features | Benefits |
|---|---|---|
| Astera Centerprise | Code-free, handles multiple sources | Timely data cleaning for businesses |
| WinPure | Integrates with Excel, advanced databases | Versatile and adaptable |
| OpenRefine | Separates numbers from text | Efficient data identification |
| SAS Data Quality | Data discovery, transformation, address verification | Comprehensive functions |
| Oracle Enterprise Data Quality | Works with CRM and ERP platforms | Seamless integration with enterprise systems |

Astera Centerprise is often cited as a top option for enterprises that require fast, code-free data scrubbing from multiple sources. WinPure offers notable flexibility.

It can tackle everything from the simplest Excel sheets to the most complex databases, making it ideal for dynamic environments.

OpenRefine's ability to distinguish numbers from text is valuable in more advanced data scrubbing work.

Features of Effective Tools

Comprehensive data scrubbing tools should include capabilities for both deep cleansing and ongoing quality upkeep. The ability to easily find and cleanse data, exemplified by SAS Data Quality, is a must-have.

Solutions such as Oracle Enterprise Data Quality provide integration with CRM and ERP platforms, like Oracle’s own Cloud-based offerings, creating an efficient, integrated workflow.

Detecting and correcting duplicate data entries is essential, as duplicates are a leading cause of low-quality data.

Benefits of Using Specialized Tools

There are many advantages to using specialized tools for data scrubbing. They help keep data clean and reliable, which matters because 75% of enterprises consider high-quality data key to their business success.

Regular scrubbing, recommended every three to six months, helps ensure that your data remains high-quality over time.

When organizations utilize tools such as SAS Data Quality and Oracle Enterprise Data Quality, the processes become more efficient and effective.

This greatly simplifies the overall task and saves you a lot of time.

Key Points to Remember

Data scrubbing allows you to identify and correct mistakes in your data.

By following simple steps and sound practices, you make sure your data is trustworthy.

- Data scrubbing plays an important role in identifying and fixing errors in datasets, improving the overall accuracy and reliability of data.

- Having clean data is critical to performing analytics effectively and making data-driven decisions, and it saves the time and money that bad data quality wastes.

- Organizations in every sector, especially those dealing with expansive datasets, need to make data scrubbing a top priority to avoid data corruption.

- Data scrubbing applies specific techniques to improve the quality of the data in aggregate.

- Frequent data scrubbing sessions help prevent long-term data degradation, which can be costly to operations and frustrating to customers.

- Using dedicated data scrubbing tools can make the process faster and more efficient, allowing for a more effective and accurate data quality assurance process.